A typical approach to building an LLM agent is to attach a powerful general-purpose model, such as GPT-4, to a few functions. But a system of LLM agents, built by orchestrating an array of models, can vastly outperform this approach. Each model is an expert in a specific task. The models are integrated either vertically, where the output of one model is passed to the next, or horizontally, where the outputs of multiple models are combined to reach one resolution.
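To make the two integration styles concrete, here is a minimal Python sketch. It is an illustration only, not Checksum's implementation: the `vertical` and `horizontal` helpers and the toy "models" are hypothetical stand-ins for real LLM or classifier calls, and majority voting is just one possible way to combine horizontal outputs.

```python
from collections import Counter
from typing import Callable, Sequence

# A "model" here is any callable from text to text. In a real system these
# would be LLM or classifier calls; every name below is hypothetical.
Model = Callable[[str], str]


def vertical(models: Sequence[Model]) -> Model:
    """Chain models: each model's output becomes the next model's input."""
    def run(text: str) -> str:
        for model in models:
            text = model(text)
        return text
    return run


def horizontal(models: Sequence[Model]) -> Model:
    """Run every model on the same input and return the majority answer."""
    def run(text: str) -> str:
        votes = Counter(model(text) for model in models)
        return votes.most_common(1)[0][0]
    return run


# Toy stand-ins for expert models.
def strip_whitespace(text: str) -> str:
    return text.strip()


def to_lower(text: str) -> str:
    return text.lower()


def guess_click(_: str) -> str:
    return "click"


def guess_type(_: str) -> str:
    return "type"


pipeline = vertical([strip_whitespace, to_lower])
ensemble = horizontal([guess_click, guess_click, guess_type])

print(pipeline("  Submit ORDER "))           # submit order
print(ensemble("<button>Submit</button>"))   # click
```

Because both helpers return another `Model`, the two styles nest: a horizontal ensemble can be one stage of a vertical pipeline, and vice versa.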

The “generic LLMs” wave of jack-of-all-trades, master-of-none models like GPT and Mistral is here, and will be here for a while longer. But I believe that the next major wave of innovation will come from task-specific LLM-based systems that connect and orchestrate multiple expert models and techniques to achieve a certain goal.

At Checksum, we generate end-to-end tests using AI models trained on real user sessions. Building our own LLM agent system is a first-class priority because it is a core part of how our product works. Initially, we approached the problem by training a single main LLM. But we realized that when we approached it from a system perspective, where different models work together, we could go much further and faster.

What Is a System of LLM Agents?

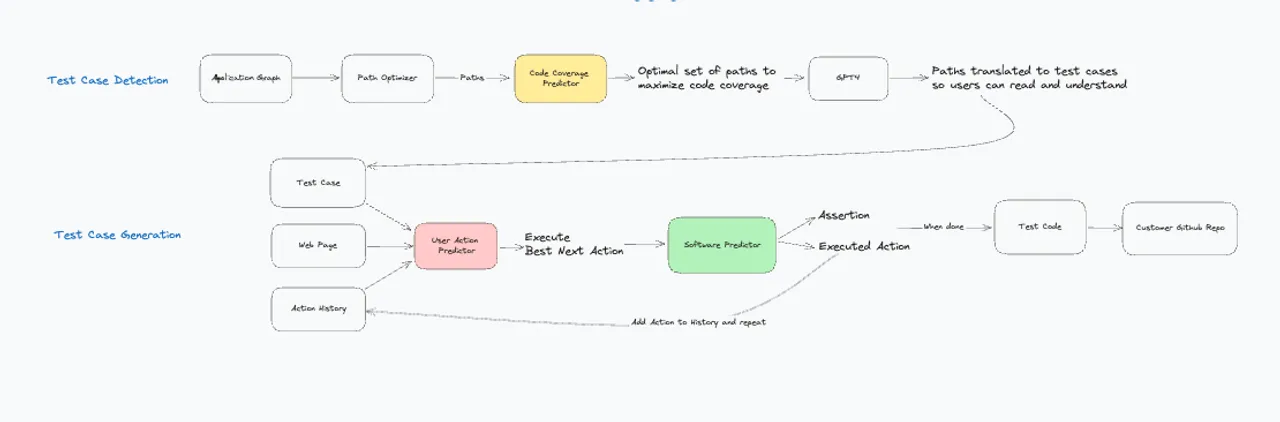

A little background on how our product works: our customers integrate a JavaScript SDK into their HTML, and Checksum collects anonymized data about usage patterns. We then use this data to train a model that can do three things very well:

- Detect test flows based on real usage patterns - happy path and edge cases.

- Execute test flows on a live environment and generate Playwright/Cypress code.

- Maintain the tests as they are run, so the testing suite constantly updates as your software changes.

LLM agents, which are LLMs that connect to different functions and can execute code, are relatively well established. But our insight goes deeper than that.

At Checksum, we don’t train a single LLM that generates the full test. Our solution is an array of models composed into one system, with the goal of executing flows on the web and converting them to end-to-end test code. The entire system is orchestrated by our main Checksum LLM, which is the heaviest of the bunch.

A System of Models Translates to Higher Overall Accuracy

A common position in deep learning is that training a model on the end-to-end task yields superior results. In our world, where we focus on E2E testing, that would mean training a model to take the full HTML of the page and return a set of actions to execute.

In our experience, this is not the best approach, at least for now.

The main factors at play are:

- The size of these models (some of them are huge).

- The cost to train them (which can be extremely expensive).

- The way they work (by predicting the next token, one after another).

Instead, we’ve taken the approach of using smaller models in conjunction with LLMs, which has yielded numerous performance and cost benefits. Here are a few reasons why:

Faster Iteration == Accuracy

When building a task-specific model, not every task requires the size and complexity of a 70B-parameter LLM. Training these models is expensive and time-consuming, and that is time not spent on other experiments. The faster we can iterate, the more experiments we can run, and the more accurate the overall system becomes. It’s far better to use LLMs for the hairiest, most complex part of your flow and train simpler models for the simpler, more straightforward parts. Generating E2E tests is a huge problem, and we approach it by attacking its smaller component problems and matching the right models to each part. We focus our engineering resources on the toughest bits, and train quick models or use heuristics on the easier parts of the pipeline.

Inference Speed == Accuracy

When building a task-specific model, a faster model often means a more accurate one. Why? Because of the unique nature of LLMs.

Usually with LLMs, the more output the model produces, the more accurate the results. Using Chain of Thought, or splitting inference into multiple sequential prompts, has been shown in many studies to improve an LLM’s reasoning. However, in the real world, inference time matters. A faster model lets you prompt it more often within the same time budget. And we’ve found that the more prompting and output you have, the more accurate the LLM’s responses are. So speed is important in itself, but also because it enables better accuracy. If you can delegate simpler tasks to faster models, you can extract more juice from your main model.
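The trade-off can be put into rough numbers. The sketch below is back-of-the-envelope arithmetic with made-up latencies (a 10-second budget per step and 2 seconds per main-LLM prompt are assumptions, not measurements from our system); `main_model_calls` is a hypothetical helper.

```python
def main_model_calls(budget_s: float, helper_s: float, main_call_s: float) -> int:
    """How many main-LLM prompts fit in one step after the simple
    subtasks (taking helper_s seconds in total) have been paid for."""
    remaining = budget_s - helper_s
    return max(0, int(remaining // main_call_s))


# Illustrative numbers only.
BUDGET_S = 10.0     # time we allow per step
MAIN_CALL_S = 2.0   # latency of one main-LLM prompt

# Case A: the main LLM also handles the simple subtasks (6 s of its time).
print(main_model_calls(BUDGET_S, 6.0, MAIN_CALL_S))  # 2

# Case B: small, fast models handle the same subtasks in 1 s.
print(main_model_calls(BUDGET_S, 1.0, MAIN_CALL_S))  # 4
```

Under these assumed numbers, delegating the simple work doubles the number of prompts available for chain-of-thought or multi-step reasoning on the hard part.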

Narrower World == Accuracy

We’ve found that LLMs struggle with very open-ended tasks, like completing a flow in a complex enterprise web app. Again, this is a result of the nature of LLMs, which have a tendency to hallucinate and be overconfident. Narrowing the LLM’s options improves accuracy and reduces hallucinations. At Checksum, we use lighter models that are trained on more data to narrow the option set to K Next Candidates. As a result, the LLM is much more likely to be correct, as long as the correct answer is among the K Next Candidates.
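Here is a minimal sketch of the narrowing idea, under stated assumptions: the word-overlap scorer stands in for a trained lightweight ranking model, `fake_llm_pick` stands in for the main LLM, and all names and data are hypothetical rather than taken from our pipeline.

```python
from typing import Callable, List, Sequence


def top_k_candidates(
    candidates: Sequence[str], score: Callable[[str], float], k: int
) -> List[str]:
    """Keep the k highest-scoring candidates (ties keep their input order)."""
    return sorted(candidates, key=score, reverse=True)[:k]


def choose_action(
    goal: str,
    candidates: Sequence[str],
    score: Callable[[str], float],
    llm_pick: Callable[[str, List[str]], str],
    k: int = 3,
) -> str:
    """Let the LLM choose, but only from the shortlist of k candidates."""
    shortlist = top_k_candidates(candidates, score, k)
    choice = llm_pick(goal, shortlist)
    if choice not in shortlist:
        # Reject anything the LLM invents outside the narrowed option set.
        raise ValueError(f"LLM returned an option outside the shortlist: {choice!r}")
    return choice


goal = "add item to cart and checkout"
goal_words = set(goal.lower().split())


def overlap_score(candidate: str) -> float:
    """Toy ranker: number of words shared with the goal."""
    return len(goal_words & set(candidate.lower().split()))


def fake_llm_pick(goal: str, shortlist: List[str]) -> str:
    """Stand-in for the main LLM: just takes the first shortlisted option."""
    return shortlist[0]


elements = [
    "Add to cart button",
    "Footer privacy link",
    "Checkout button",
    "Newsletter signup input",
    "Cart icon",
]

shortlist = top_k_candidates(elements, overlap_score, k=3)
print(shortlist)
print(choose_action(goal, elements, overlap_score, fake_llm_pick))
```

The membership check is the important design choice: the LLM can only be wrong within the shortlist, so the ranker's recall at K bounds the accuracy of the whole step.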

Confidence Score == Accuracy

It’s very hard to extract a true confidence score from an LLM response. Yes, you can measure the logprobs, but since the probability of each token is conditioned on the previously generated tokens, the result might not reflect the real confidence. Using smaller classifier models allows you to get a confidence score, which, in a system controlled by an LLM, can affect the decision-making process. For example, with lower confidence, you can use a more “expensive” model, or you can train models that are better at making a prediction in ambiguous situations.
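A confidence-based fallback of this kind can be sketched as follows. This is an illustrative example, not our production logic: the softmax-based `classify` function stands in for a small trained classifier, `expensive_model` stands in for a heavier model, and the 0.8 threshold is an arbitrary assumption.

```python
import math
from typing import Callable, Dict, Tuple


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Turn raw class scores into probabilities that sum to 1."""
    top = max(scores.values())
    exps = {label: math.exp(value - top) for label, value in scores.items()}
    total = sum(exps.values())
    return {label: value / total for label, value in exps.items()}


def classify(scores: Dict[str, float]) -> Tuple[str, float]:
    """Toy small classifier: return the best label and its probability."""
    probs = softmax(scores)
    label = max(probs, key=probs.get)
    return label, probs[label]


def route(
    scores: Dict[str, float],
    expensive: Callable[[Dict[str, float]], str],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Use the cheap prediction when confident, otherwise escalate."""
    label, confidence = classify(scores)
    if confidence >= threshold:
        return label, "cheap"
    return expensive(scores), "expensive"


def expensive_model(_: Dict[str, float]) -> str:
    """Stand-in for a slower, heavier model used only for ambiguous cases."""
    return "type"


confident_case = {"click": 4.0, "type": 0.5}   # one class clearly dominates
ambiguous_case = {"click": 1.0, "type": 0.9}   # nearly a coin flip

print(route(confident_case, expensive_model))  # ('click', 'cheap')
print(route(ambiguous_case, expensive_model))  # ('type', 'expensive')
```

The threshold is the main tuning knob: raising it sends more traffic to the expensive model in exchange for fewer low-confidence decisions from the cheap one.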

Final Thoughts

Assuming unlimited data and compute power, a huge, all-knowing model may outperform a pipeline of smaller models. But we’ve found that training smaller models allows us to compartmentalize the problem and better control our destiny. We will share more about the details of our models in upcoming posts, but in the meantime, here are a few articles that gave us inspiration:

- Pix2Struct

- OS-Copilot: Towards Generalist Computer Agents with Self-Improvement

- CoVA: Context-aware Visual Attention for Webpage Information Extraction

If you want to see our model in action, feel free to book a demo with us.

About The Author

Gal Vered is a Co-Founder at Checksum, where they use AI to generate end-to-end Cypress and Playwright tests, so that dev teams know that their product is thoroughly tested and shipped bug-free, without the need to manually write or maintain tests.

In his role, Gal has helped many teams build their testing infrastructure, solve typical (and not so typical) testing challenges, and deploy AI to move fast and ship high-quality software.