At Checksum.ai, we build a lot of agents. Like most teams, we default to JSON for structured output — it's easy to parse, well-supported, and gets the job done.

But for coding tasks specifically, we started questioning this choice. Code in the wild isn't JSON. When an LLM writes or edits code, it's producing something that naturally fits in markdown code blocks or even XML tags. We wondered: are we handicapping our agents by forcing everything through JSON?

So we ran a quick experiment to find out.

Experiment Setup

We created 30 tasks across three categories:

- Story Writing (10 tasks): Creative prompts like "a robot discovering emotions" or "a time traveler preventing their own birth"

- Coding (10 tasks): Implementations including LRU cache, binary search tree, trie, topological sort, and math expression parser

- Bug Fixing (10 tasks): Given buggy code, output find/replace operations to fix identified issues

Each task was run with three different output format requirements: JSON, XML, and Markdown. That's 90 total runs.

Models used:

- Task execution: Claude Haiku 4.5

- Quality judgment: Claude Sonnet 4.5 with extended thinking

For coding and bug fix tasks, we also ran actual tests to verify the code worked.

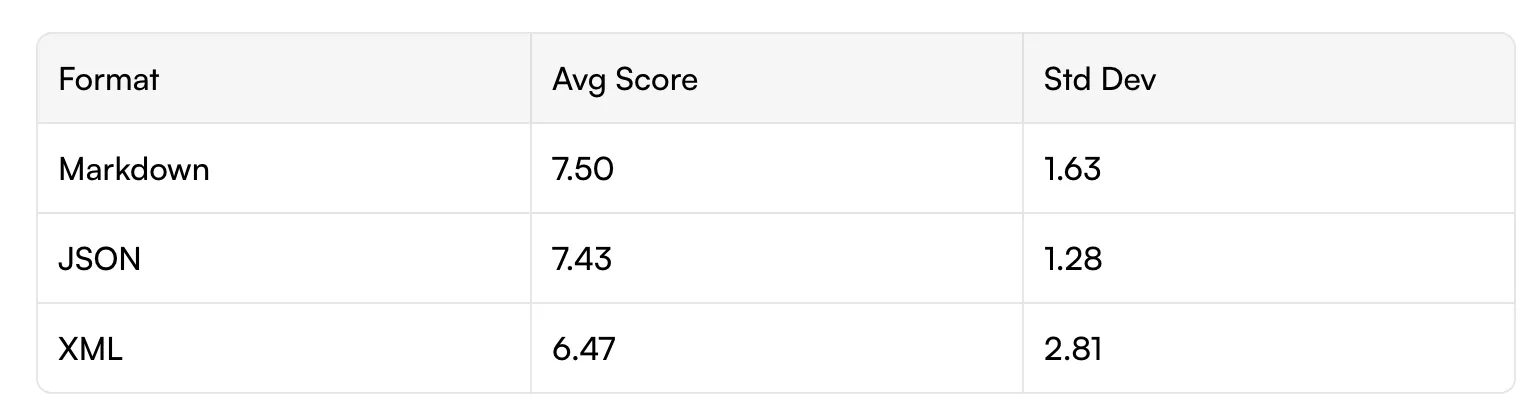

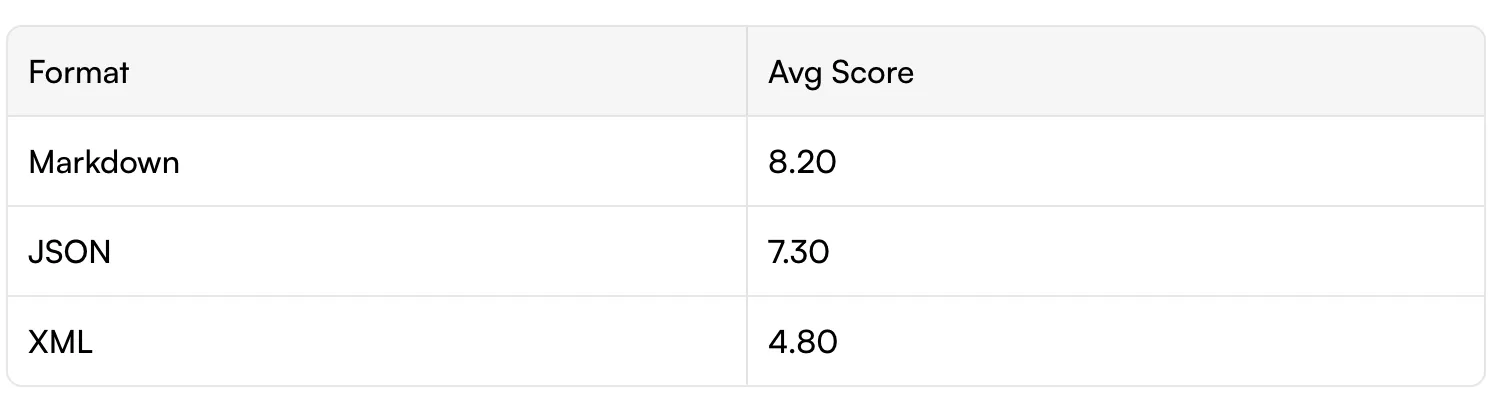

Overall Results

The formats performed more similarly than we expected. Markdown and JSON are essentially tied, with XML trailing behind and showing higher variance.

Results by Task Type

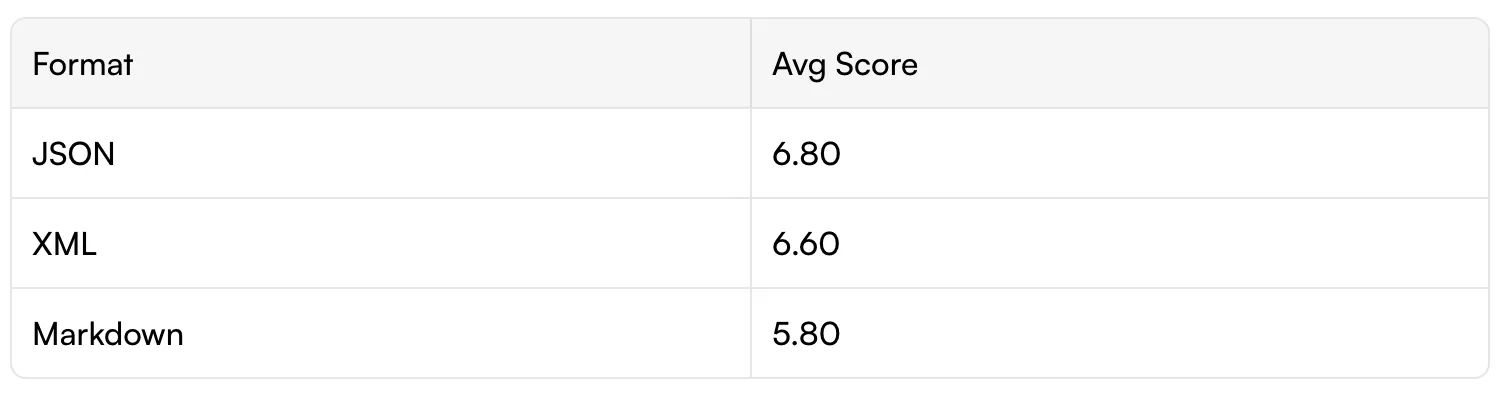

Story Writing

We expected Markdown to shine here — it's the natural format for prose. Instead, JSON performed best. The structured format (title, paragraphs with titles, sentences as arrays) may have provided helpful scaffolding for narrative organization.

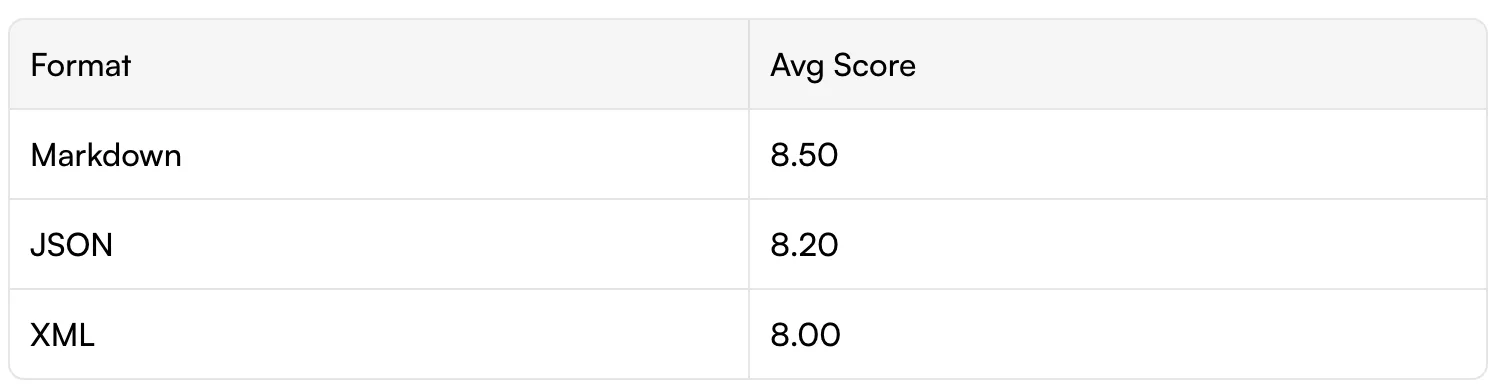

Coding

All formats performed well and consistently. The model produced working code regardless of the output wrapper. Test pass rates were similar across the board.

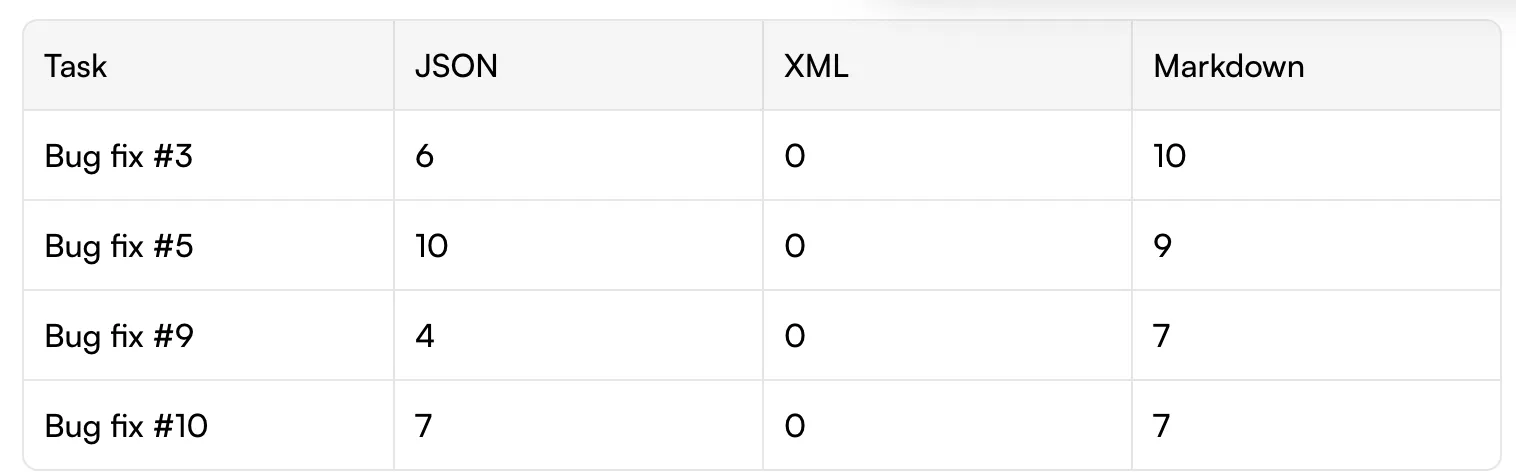

Bug Fixing

Bug fixing showed the biggest format impact. This task required outputting precise find/replace pairs — exact text matches to locate and replace buggy code.

The XML Bug Fix Struggle

XML struggled significantly with bug fix tasks. The model had difficulty producing accurate find/replace patterns when constrained to XML structure.

Looking at individual tasks, the variance was striking:

Same task, same model, same underlying prompt — scores swinging from 0 to 10 based on output format. The model simply couldn't express precise code edits reliably in XML structure.

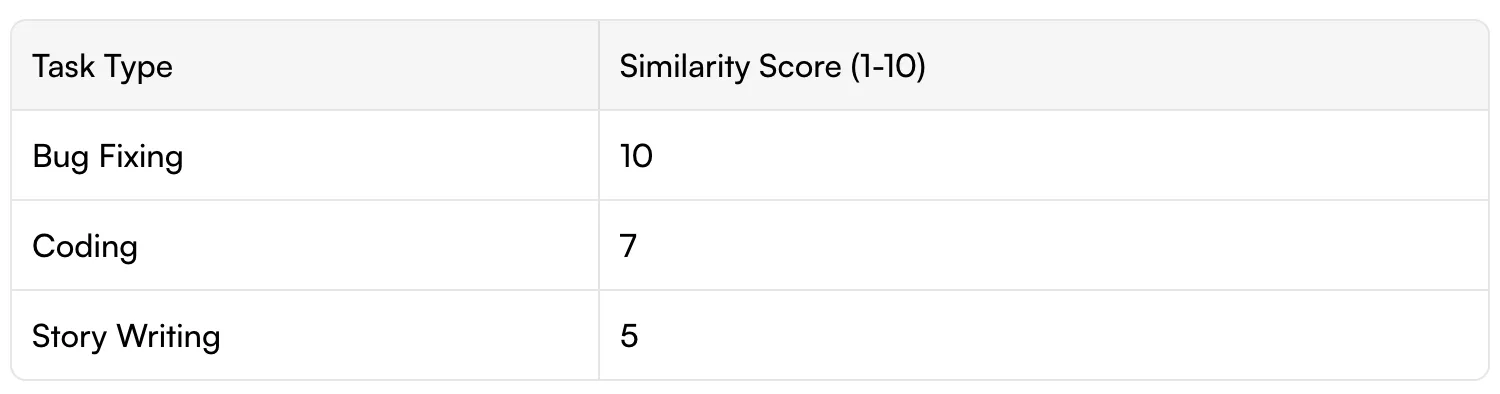

Content Similarity: Same Thinking, Different Wrapper?

We used Claude Sonnet with extended thinking to compare outputs across formats for the same task. The question: does the format change what the model actually produces, or just how it's wrapped?

Bug Fixing (10/10): Identical solutions across formats. The model identified the same bugs and produced the same fixes — the format was purely presentational (when it worked).

Coding (7/10): Same algorithms and approaches, but implementation details varied. For example, the LRU cache produced OrderedDict-based solutions in JSON/Markdown but a manual doubly-linked list in XML. Same complexity, different code.

Story Writing (5/10): Same premise and themes, but genuinely different stories. Different plot developments, different character arcs, different resolutions. The format constraint pushed the model into different creative directions.

Practical Recommendations

The bottom line: format choice is not a big deal for most tasks.

The differences we observed were smaller than we expected. JSON and Markdown performed nearly identically overall. XML lagged behind, but mainly on one specific task type.

Our recommendation: default to whatever format is easiest to parse in your stack. If you're in JavaScript, JSON is natural. If you're rendering to users, Markdown works great. Don't overthink it.

Could you test your specific use case? Sure. But optimizing output format is not low-hanging fruit. There are better places to spend your time — prompt engineering, task decomposition, or model selection will likely have bigger impact than switching from JSON to Markdown.

Conclusion

We went into this experiment wondering if we should switch away from JSON for coding tasks. The answer: probably not worth it.

Format matters less than we thought. Pick based on parsing convenience, not performance hopes. JSON and Markdown are both solid defaults that will serve you well.

The one caveat: if you're doing precise text manipulation tasks (like find/replace), you might want to avoid XML. But for most use cases, just pick what's easiest to work with and move on.

Experiment run with Claude Haiku 4.5 for task execution and Claude Sonnet 4.5 for evaluation. 30 tasks, 90 total runs.